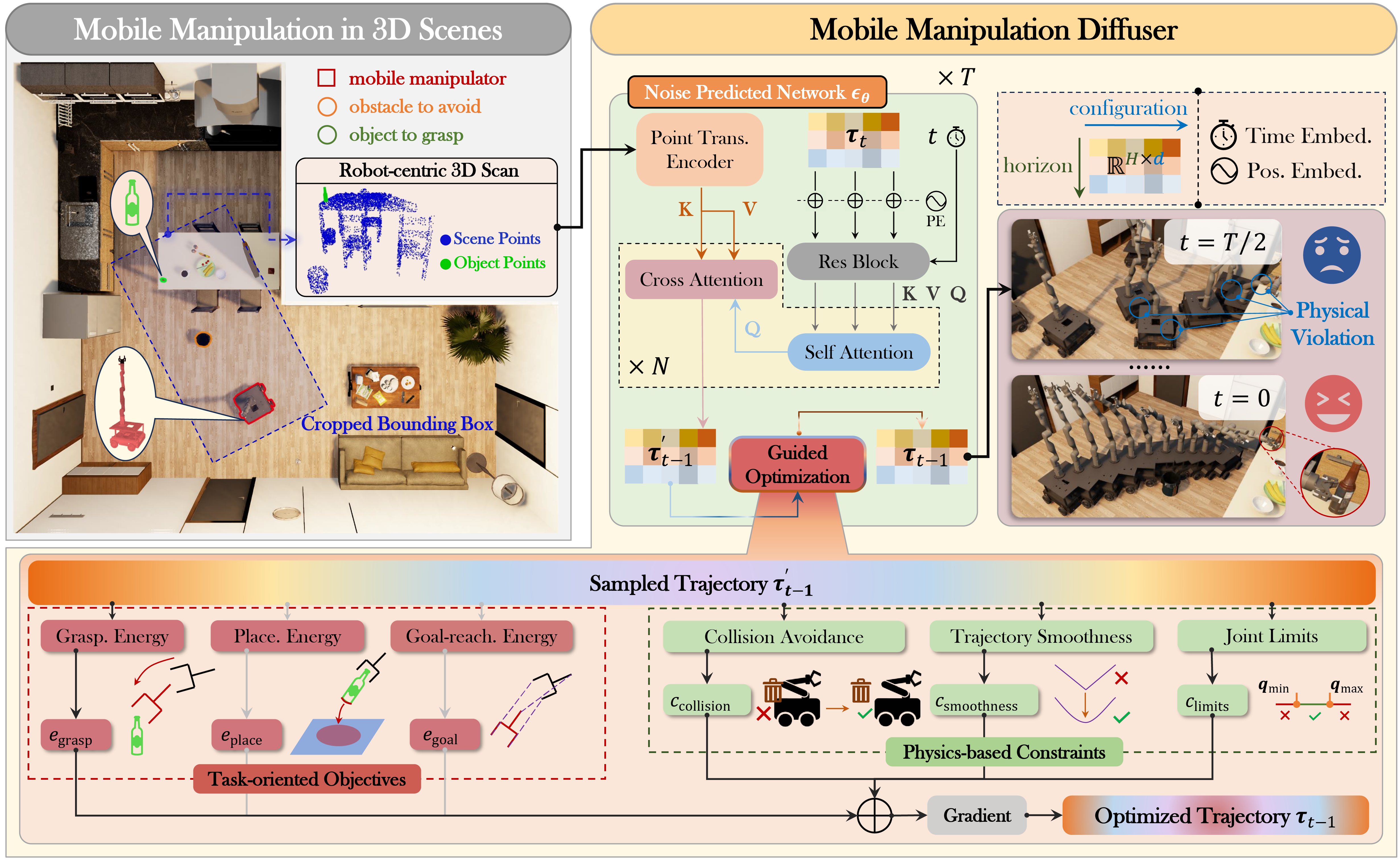

Recent advances in diffusion models have opened new avenues for research into embodied AI agents and robotics. Despite significant achievements in complex robotic locomotion and skills, mobile manipulation—a capability that requires the coordination of navigation and manipulation—remains a challenge for generative AI techniques. This is primarily due to the high-dimensional action space, extended motion trajectories, and interactions with the surrounding environment. In this paper, we introduce M²Diffuser, a diffusion-based, scene-conditioned generative model that directly generates coordinated and efficient whole-body motion trajectories for mobile manipulation based on robot-centric 3D scans. M²Diffuser first learns trajectory-level distributions from mobile manipulation trajectories provided by an expert planner. Crucially, it incorporates an optimization module that can flexibly accommodate physics-based constraints and task objectives, modeled as cost and energy functions, during the inference process. This enables the reduction of physical violations and execution errors at each denoising step in a fully differentiable manner. Through benchmarking on three types of mobile manipulation tasks across over 20 scenes, we demonstrate that M²Diffuser outperforms state-of-the-art neural planners and successfully transfers the generated trajectories to a real-world robot. Our evaluations underscore the potential of generative AI to enhance the scalability of traditional planning and learning-based robotic methods, while also highlighting the critical role of enforcing physics-based constraints for safe and robust execution.

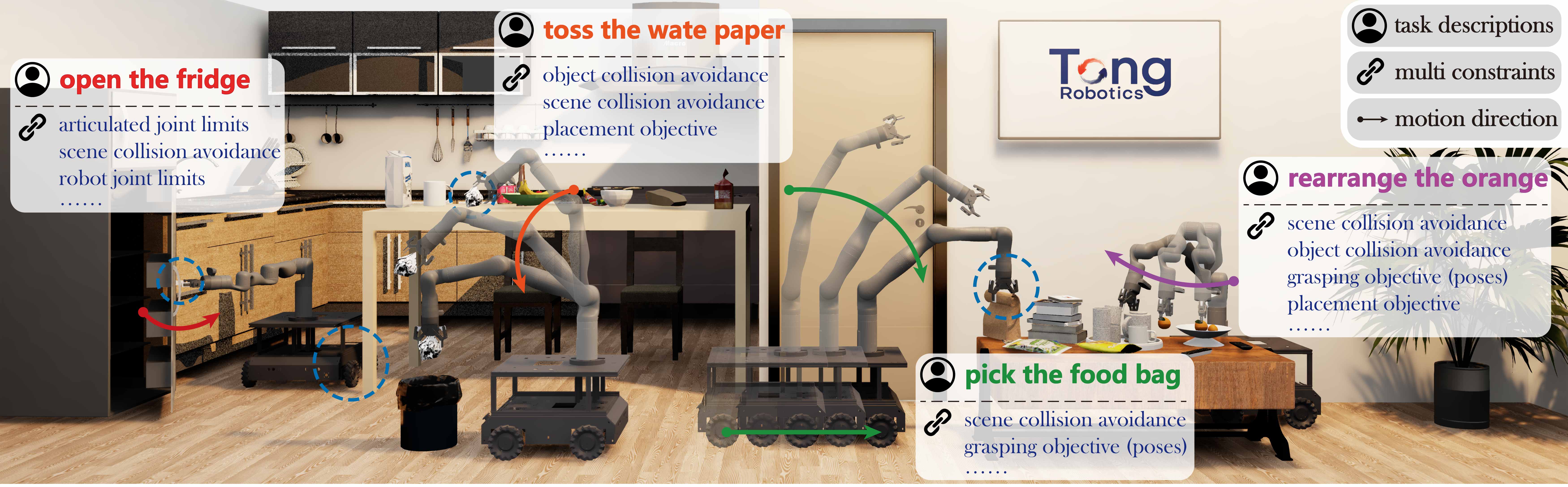

Mobile manipulation has been widely applied to tasks such as grasping, placement, and object rearrangement. An ideal motion planner must not only account for the environment's geometry to avoid collisions but also ensure that the generated trajectories suit the robot's embodiment (joint limits, kinematics, and base-arm coordination) and successfully complete the specified task (e.g., grasping or placement objectives).

In this work, we aim to design a neural motion planner that accounts for multiple constraints in mobile manipulation while generating motion trajectories. Two key challenges must be solved: (1) how to acquire high-quality training data for high-dimensional mobile manipulation, and (2) how to effectively integrate multiple constraints into the motion generation framework to ensure physically safe execution.

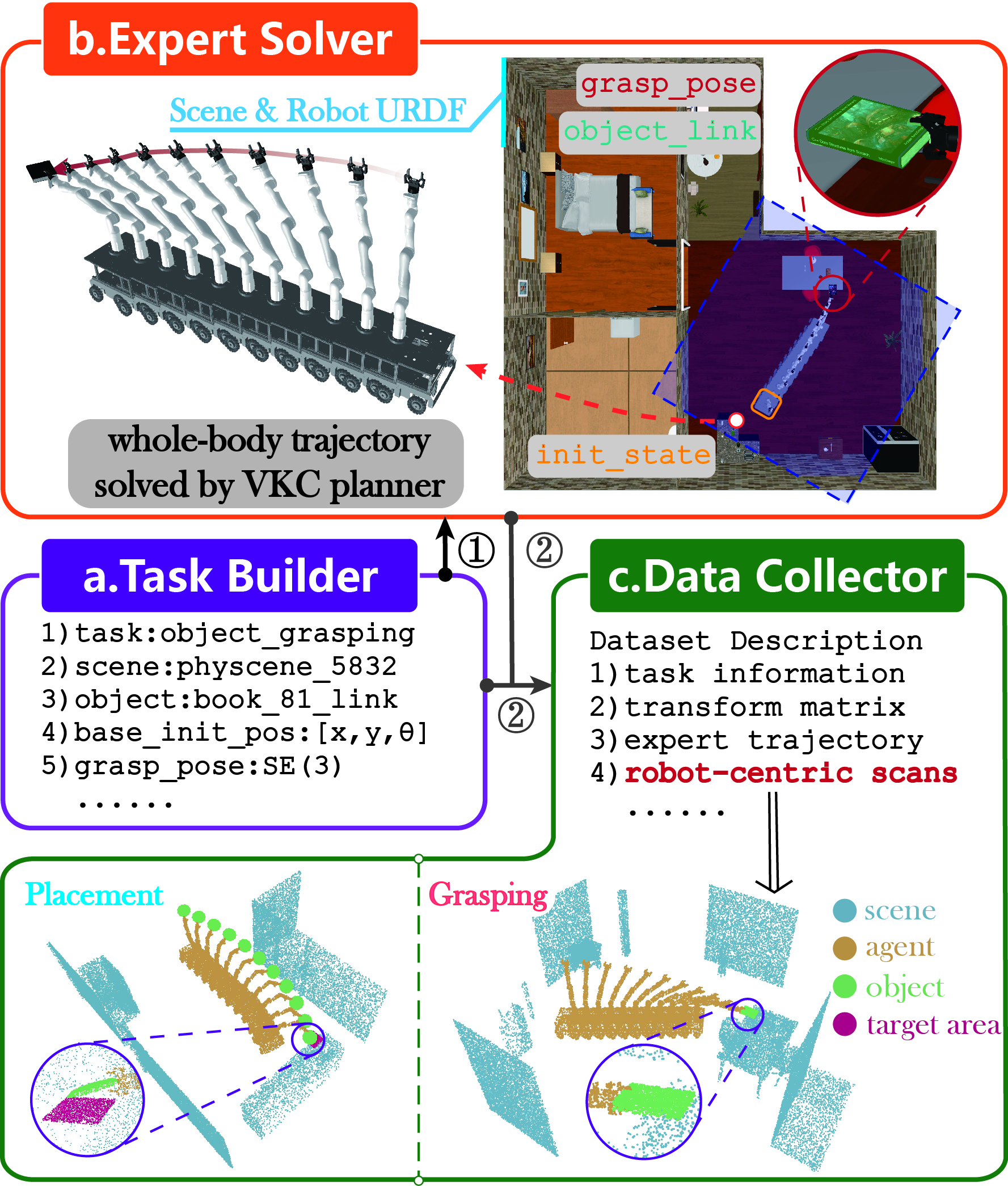

To obtain high-quality training data, we propose a dataset collection tool comprising three key components. (a) The Task Builder constructs mobile manipulation tasks from high-level configurations, including the scene and robot URDFs, the manipulated object link, the target end-effector goal, and the task type. (b) The Expert Solver computes optimal whole-body coordinated trajectories using the VKC algorithm. (c) The Data Collector records the planned trajectories and processes the segmented and calibrated point clouds, which are cropped from the perfect 3D scan using a bounding box around the robot's initial state.
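The Task Builder inputs and the Data Collector's cropping step can be sketched as follows. This is a minimal illustration, not the authors' tool: the `TaskConfig` schema and the `crop_robot_centric` function are hypothetical, and we assume the "bounding box around the robot's initial state" is a square box of fixed half-extent aligned with the robot's initial heading, with the kept points expressed in the robot's base frame so the observation is robot-centric. Points are plain tuples to keep the example dependency-free.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float, float]


@dataclass
class TaskConfig:
    # Hypothetical schema mirroring the Task Builder inputs listed above.
    scene_urdf: str             # scene description file
    robot_urdf: str             # mobile manipulator description file
    object_link: str            # link of the manipulated object
    ee_goal: Tuple[float, ...]  # target end-effector pose
    task_type: str              # e.g. "grasp" or "place"


def crop_robot_centric(points: List[Point],
                       base_pose: Tuple[float, float, float],
                       half_extent: float, z_max: float) -> List[Point]:
    """Keep points inside a square box centred on the robot's initial base pose
    (x, y, yaw) and return them in the robot's base frame (assumed cropping rule)."""
    bx, by, yaw = base_pose
    c, s = math.cos(yaw), math.sin(yaw)
    cropped = []
    for x, y, z in points:
        dx, dy = x - bx, y - by
        # Rotate the world-frame offset by -yaw so the robot faces +x.
        rx = c * dx + s * dy
        ry = -s * dx + c * dy
        if abs(rx) <= half_extent and abs(ry) <= half_extent and 0.0 <= z <= z_max:
            cropped.append((rx, ry, z))
    return cropped


# Usage with made-up values: the robot starts at (2, 2) facing +y.
task = TaskConfig("scene.urdf", "robot.urdf", "bottle_link",
                  (0.6, 0.0, 0.9, 0.0, 0.0, 0.0, 1.0), "grasp")
scene = [(2.0, 3.0, 0.5), (1.0, 3.0, 0.2), (9.0, 9.0, 0.5), (2.0, 3.0, 5.0)]
crop = crop_robot_centric(scene, base_pose=(2.0, 2.0, math.pi / 2),
                          half_extent=2.0, z_max=2.0)
# The far point and the point above z_max are dropped.
print(crop)
```

Expressing the crop in the base frame means the same scene region produces the same observation regardless of where the robot stands in the world, which is one plausible reading of "robot-centric".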

To effectively integrate multiple constraints into a learning-based motion generation framework, we propose M²Diffuser, the first neural motion planner tailored for mobile manipulation in 3D scenes. M²Diffuser is a diffusion-based motion planner that samples and optimizes whole-body coordinated trajectories directly from natural 3D scans. Using robot-centric 3D scans as visual observations, it employs an iterative denoising process to gradually generate task-related whole-body motion trajectories conditioned on the 3D environment (see the video on the left). M²Diffuser integrates multiple constraints into the denoising process: at each denoising step, the trajectory is optimized via differentiable physics-based cost functions and task-oriented energy functions, which can be either explicitly defined or learned from data. This framework promotes, as far as possible, both the physical plausibility of the generated trajectories and their task completion.
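Below is a minimal sketch of cost-guided denoising in the spirit of the paragraph above; it is not M²Diffuser's actual implementation. Assumptions, all hypothetical: `toy_denoiser` stands in for the learned scene-conditioned network (it only smooths neighbouring waypoints and pins the first one to the start configuration); the cost J combines a squared joint-limit violation penalty with a goal energy ||q_H - q_goal||^2 on the final waypoint; gradients are written analytically instead of obtained by automatic differentiation; and the optimization module is a fixed number of gradient-descent steps on J at every denoising step, followed by Gaussian noise whose scale shrinks to zero at the last step.

```python
import random
from typing import List

Traj = List[List[float]]  # H waypoints, each a D-dim whole-body configuration


def toy_denoiser(x: Traj, start: List[float]) -> Traj:
    """Hypothetical placeholder for a learned denoiser: smooth neighbouring
    waypoints and pin the first waypoint to the start configuration."""
    h = len(x)
    mu = []
    for i in range(h):
        prev_w, next_w = x[max(i - 1, 0)], x[min(i + 1, h - 1)]
        mu.append([(a + 2 * b + c) / 4 for a, b, c in zip(prev_w, x[i], next_w)])
    mu[0] = list(start)
    return mu


def cost_grad(x: Traj, lower, upper, goal, w_lim: float, w_goal: float) -> Traj:
    """Gradient of J(x) = w_lim * sum(squared joint-limit violations)
    + w_goal * ||x[-1] - goal||^2 (a task energy on the final waypoint)."""
    grad = [[2 * w_lim * (max(0.0, q - hi) - max(0.0, lo - q))
             for q, lo, hi in zip(w, lower, upper)] for w in x]
    for j, gj in enumerate(goal):
        grad[-1][j] += 2 * w_goal * (x[-1][j] - gj)
    return grad


def guided_sample(start, goal, lower, upper, horizon=8, steps=20,
                  opt_iters=30, lr=0.25, w_lim=2.0, w_goal=1.0, seed=0) -> Traj:
    rng = random.Random(seed)
    dim = len(start)
    # x_T: pure Gaussian noise over the whole trajectory.
    x = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(horizon)]
    for t in range(steps, 0, -1):
        mu = toy_denoiser(x, start)
        # Optimization module: descend the cost/energy at this denoising step.
        for _ in range(opt_iters):
            g = cost_grad(mu, lower, upper, goal, w_lim, w_goal)
            mu = [[m - lr * gm for m, gm in zip(w, gw)] for w, gw in zip(mu, g)]
            mu[0] = list(start)
        sigma = 0.1 * (t - 1) / steps  # sigma is 0 on the final step
        x = [[m + sigma * rng.gauss(0.0, 1.0) for m in w] for w in mu]
    return x


traj = guided_sample(start=[0.0, 0.0], goal=[1.5, -0.4],
                     lower=[-2.0, -1.0], upper=[2.0, 1.0])
print(traj[-1])
```

Because J is convex and, per coordinate, its gradient is Lipschitz with constant 2·w_lim + 2·w_goal, the step size is chosen so that `lr * (2*w_lim + 2*w_goal) < 2`, which keeps plain gradient descent from diverging. Other differentiable terms, such as a collision cost against the scene point cloud, would be added to J in the same way.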

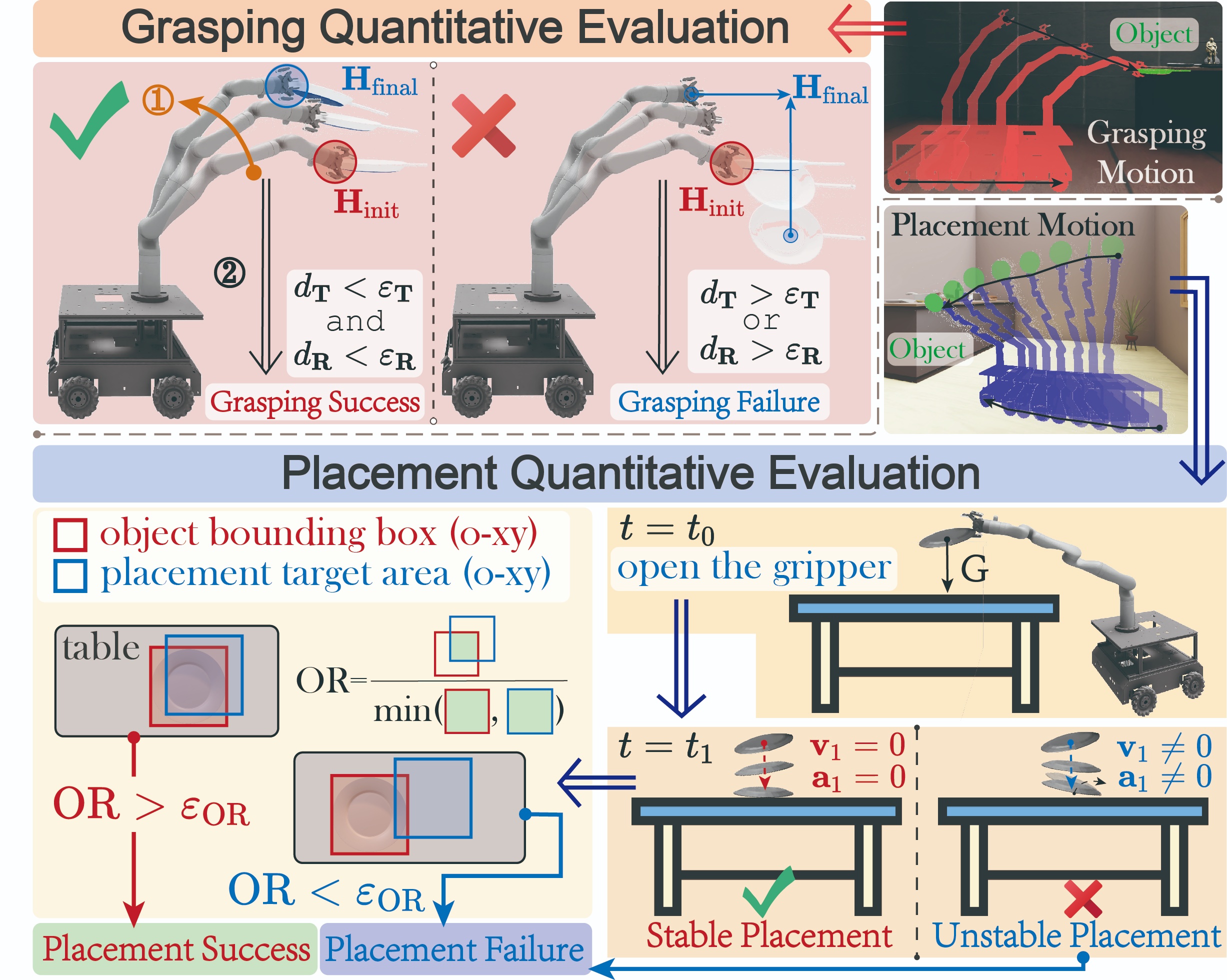

We use NVIDIA Isaac Sim to accurately and quantitatively evaluate physical grasping and placement within the simulated 3D environments, which yields more reliable evaluations than those of previous studies.

We directly apply the model trained on simulated data to robotic pick-and-place tasks in real 3D scenes, with significant success. These videos demonstrate successful mobile manipulation tasks, such as picking and placing bottles from a cabinet and handing snacks to a person. Our work is the first to achieve seamless sim-to-real transfer in learning-based mobile manipulation. Previous works either failed to directly apply models trained in simulation to real-world scenarios or were limited to structured environments.

@article{yan2025m2diffuser,
  title={M2Diffuser: Diffusion-based Trajectory Optimization for Mobile Manipulation in 3D Scenes},
  author={Yan, Sixu and Zhang, Zeyu and Han, Muzhi and Wang, Zaijin and Xie, Qi and Li, Zhitian and Li, Zhehan and Liu, Hangxin and Wang, Xinggang and Zhu, Song-Chun},
  journal={IEEE Transactions on Pattern Analysis and Machine Intelligence},
  year={2025},
  publisher={IEEE}
}

@article{yan2024m2diffuser,
  title={M2Diffuser: Diffusion-based Trajectory Optimization for Mobile Manipulation in 3D Scenes},
  author={Yan, Sixu and Zhang, Zeyu and Han, Muzhi and Wang, Zaijin and Xie, Qi and Li, Zhitian and Li, Zhehan and Liu, Hangxin and Wang, Xinggang and Zhu, Song-Chun},
  journal={arXiv preprint arXiv:2410.11402},
  year={2024}
}